
Yes, this is yet another post written by a disgruntled human on the internet complaining about ChatGPT and its infiltration into seemingly every fiber of daily life. I'm sure you've all seen similar posts, but none of them are written by me, so I'm here to air my thoughts into the world. This is by no means a great think piece. I have my opinions on ChatGPT and other GenAI like it, but I've never really vocalized them before. So, I'm using this as an opportunity to comb through my thoughts.

I want this post to be less of a rant (i.e., not overtly negative in tone) and more of an analytical piece picking apart how ChatGPT has manifested itself in my personal life. With that being said, enjoy!

AI, AI, it's seemingly everywhere now!

But the worst offender has to be ChatGPT.

It's not that I never noticed how normalized GenAI has become in the past year or so, but I guess it never fully sank in that it's going to be like this from now on and into the foreseeable future. GenAI is here to stay and I'm a little scared.

There were 3 particular incidents that happened recently that made me realize just how normalized it has become for people to use ChatGPT in, well, basically everything!

Incident #1

I'm currently in my second year of college, living in a dorm managed by the university. Our lovely RA has taken the time to decorate our hall with posters related to our hall theme, techwave. Well, the thing is, most of the posters are AI-generated images (that are also extremely low quality for some reason, but that's beside the point). Every day, I walk past an AI image of flying cars with the caption FLYING CARS (in all caps, yes) printed on letter-sized paper. I almost want to laugh at how absurd the situation is. Is this what the future of tech is about? Databases trained off of stolen artworks? The aesthetics of the death of human-made art, replaced by slop spit out by a lifeless machine? I'm also very baffled, because for a theme as generic as techwave—which could be anything related to technology and futurism—it couldn't possibly be that hard to find non-AI images to use! I do not assume this is done out of malice; I'm sure my RA has the nicest intentions, wanting to liven up our living space a little, but I can't help but feel disappointed. If anything, this is a reflection of how acceptable it has become to use AI-generated images in casual settings: home decor, memes, AI song covers... all that jazz. I miss human-made stock images.

Incident #2

I was with a couple of second-years, talking to two third-year students about a philosophy/social sciences class we're taking. For context, this was at an event, and we hadn't known each other until like an hour before. Both third-years had taken the class before, and they attested that there's a lot of assigned reading. And then one of them dropped this bombshell of a sentence:

Just have ChatGPT summarize it for you, that's what I did.

Not the exact quote because my memory is fuzzy, but it's more or less what was said. The reception? Approving nods and mm-hmms from the other folks in the group. Oh, I remember so clearly in that moment I had the biggest smile on my face—the nervous laugh kind of smile. "Holy shit we're fucked" was my honest reaction. No wonder people say media literacy is dead. I know people hate assigned readings, especially philosophical texts and research papers that are hard to decipher. Heck, it's not like I always enjoy them either! But holy shit. Why would you do this to yourself? I can't imagine how much relying on ChatGPT to summarize "hard" texts for you fucks with your reading comprehension. It's quite literally handing everything to you on a platter, without you having to do any work to mentally challenge yourself. I so, so wanted to voice my concerns then, but alas I kept my mouth shut. To keep the peace, I suppose, but also what could really be accomplished? It's unlikely that I can change their minds. This is the new normal, and I despise every bit of it.

Incident #3

During my first discussion section of the philosophy/social sciences class, my TA straight up suggested that to better understand a particularly hard-to-read philosophical text, we can "have a conversation" with ChatGPT about it. In the moment, I remember thinking:

Wow, endorsing the use of ChatGPT in a humanities class... that's certainly something.

And then it hit me: this is not the first course I've taken that has officially endorsed or encouraged the use of ChatGPT. I guess it's just assumed that everyone uses ChatGPT now so you might as well incorporate it into the curriculum somehow. It's such whiplash after having taken a humanities writing course last year that strongly discouraged the use of generative AI. At least the TA discouraged the use of ChatGPT as a summarizer tool, and would rather have us ask it questions instead, feigning some kind of intellectual discussion in lieu of a human mind. But I really don't know what difference that can make.

// The Prevalence of ChatGPT in College/University

Every time I go into my school's library, I'm bound to walk past someone dual-wielding ChatGPT and their homework. I get it, it's the easy way out. Sometimes Google can't give you the exact answer that you're looking for, but you know who could? ChatGPT babyyy. And I think that's its biggest allure:

It's easy as hell to use ChatGPT.

It's easily accessible. It's free. My question is, has it become so normalized to use ChatGPT that people do not recognize its usage as wrong? I'm not talking about wrong in the moral sense; it's more so in the violation of academic integrity sense—aka cheating. Yet another piece of anecdotal evidence: at the tutoring center, the person next to me said, without missing a beat, that they use ChatGPT to solve the physics problems and then work in reverse. I was speechless. Bro, how do you look the TA dead in the eye and just admit that? To say it like it's normal and acceptable... man... what are we doing here.

I mentioned previously that I've had multiple courses where the use of ChatGPT was permitted or encouraged. I suppose you could say it's very progressive of them to embrace change, and perhaps even the inevitable. But, is this really the future we want...? In one such course, using GenAI to generate code for homework and open-notes, open-internet exams is acceptable as long as you specify in your answers that such tools were used. We did have one assignment where we had to research the cons of ChatGPT, so that's something I guess. Though I'm happy to report that most professors I've had either explicitly banned the use of ChatGPT or at least said something along the lines of "you should be the one doing the heavy-lifting of learning, not some AI".

// You can pry these em dashes from my cold, dead hands

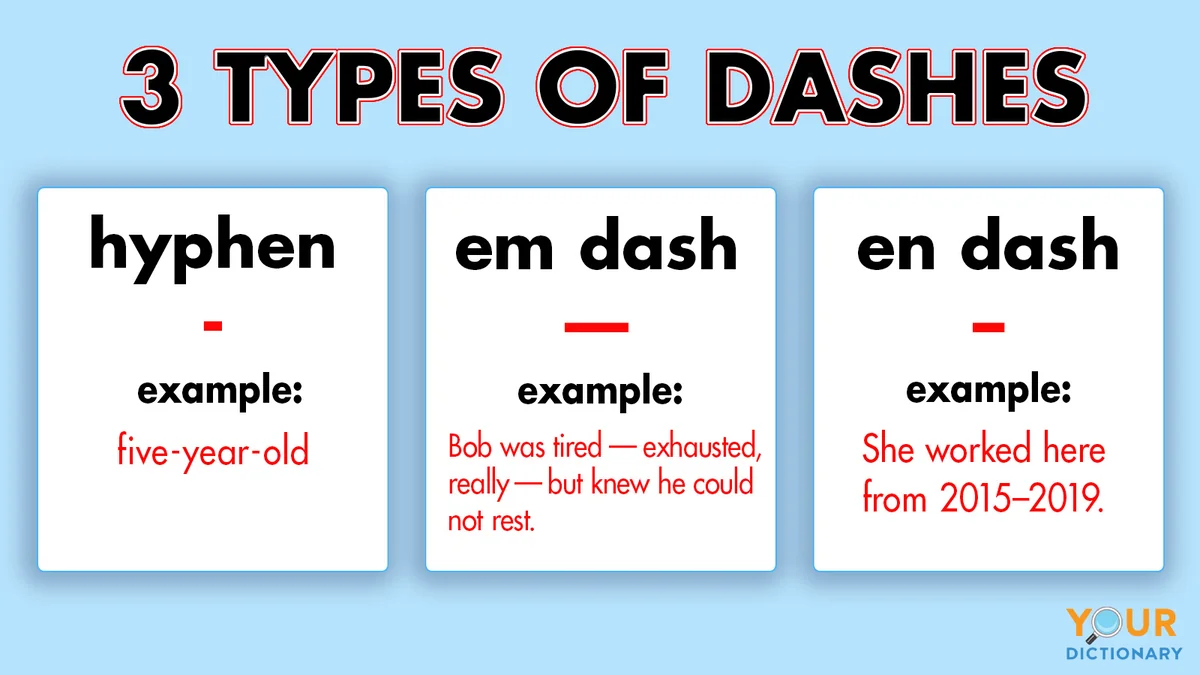

I REFUSE to let AI hijack my beloved em dash. Everyone please take a moment to appreciate the beauty of the humble en and em dashes:

Apparently using em dashes and semicolons is indicative of AI now; that sucks. I will continue using them though—they're the perfect buffers for not-so-quite pauses and subtle changes of subject! Em dashes have been such an integral part of my writing style. I dunno when I started becoming attached to them but I like to use them at every opportunity (well I try to not overuse them lol). I will NOT abandon them out of the fear of my writing being flagged as AI-generated. Besides, I'd like to think that when it comes to writing essays for classes, I have a distinct enough writing voice that the instructor will be able to tell it's me :)